One challenge I’ve been facing while learning Japanese is figuring out the correct pronunciation of random kanji. There are plenty of dictionaries out there, but kanji often have multiple possible readings, and as a beginner, manually looking them up can be time-consuming.

Some might argue that consulting a dictionary is beneficial for reinforcing memory. However, I find it disruptive when I encounter parts of a sentence I can’t read: stopping to look them up breaks my flow just as I’m trying to build an ear for how the language sounds.

Because of this, I searched for tools that could quickly annotate kanji on a webpage—and found nothing that met two specific criteria:

- Well-integrated with the browser: The tool should allow quick lookups with minimal user input (i.e., no copying and pasting).

- Accurate to the context: It must determine the correct pronunciation in context.

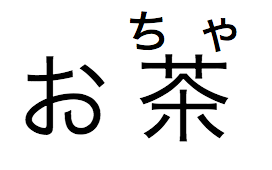

To solve this, I created a personal browser extension that uses a large language model to figure out the best context-driven readings, and I’m quite happy with the results.

I’m not open-sourcing this project because I plan to extend it into a more versatile language-learning tool, and it may eventually become a commercial project.

That said, here are some technical details from the implementation that I found fascinating:

Okurigana

For those unfamiliar with Japanese, okurigana are the kana (typically hiragana) that follow a kanji to complete a word’s spelling and show its grammatical function. These kana often indicate verb conjugations, adjective endings, and other nuances that help readers identify the correct reading and meaning of the kanji in context.

A common pitfall for automated systems is correctly separating the kanji’s “core” reading from the trailing okurigana. For instance:

- In the word 向かう (mu-ka-u), the kanji 向 should be annotated as 「む」(mu), and the okurigana is 「かう」(ka-u).

- However, if the word is written as 向う (which sometimes happens in less formal or stylized text), then the kanji takes 「むか」(mu-ka), and the okurigana is 「う」(u) instead.

Notice how the total reading (むかう) remains the same, but the division between kanji and okurigana changes depending on how many kana follow the kanji. Humans do this “look back and check” step automatically when reading: we see the kanji, look at the trailing kana, and mentally separate them. A smaller language model without a proper reasoning phase often skips this “look back” step, so it may assign the entire reading “むかう” (mu-ka-u) to the kanji, even though part of that reading belongs to the okurigana and should not appear above the kanji.

Larger reasoning models handle this much better because they essentially replicate that human-like “reconfirmation” process: they first identify the full reading of the word with the help of the okurigana, then keep only the part that the okurigana does not already spell out.
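To make the “look back and check” step concrete, here is a minimal Python sketch of it as a deterministic post-processing function. This is only an illustration, not the code from my extension: the function names are hypothetical, and it assumes the word's full reading is already known and that the okurigana appear only at the end of the word.

```python
def is_kana(ch: str) -> bool:
    """True if ch falls in the hiragana or katakana Unicode blocks."""
    return "\u3041" <= ch <= "\u30ff"


def split_okurigana(surface: str, reading: str) -> tuple[str, str, str]:
    """Split a word into (kanji part, kanji reading, okurigana).

    Walks backwards from the end of the word and strips every trailing
    kana that also ends the reading. Whatever is left of the reading
    is what belongs above the kanji.
    """
    n = 0
    while (
        n < len(surface)
        and n < len(reading)
        and is_kana(surface[-1 - n])
        and surface[-1 - n] == reading[-1 - n]
    ):
        n += 1
    cut = len(surface) - n
    return surface[:cut], reading[: len(reading) - n], surface[cut:]


if __name__ == "__main__":
    print(split_okurigana("向かう", "むかう"))  # kanji 向 gets む
    print(split_okurigana("向う", "むかう"))    # kanji 向 gets むか
```

Words with kana between kanji (such as 取り扱い) would need a more careful alignment than this suffix check, which is part of why the task is harder than it first looks.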

This difference in performance highlights a major rule to follow when implementing anything LLM-powered: if you’re using a smaller model due to cost or speed, then you should be very careful with tasks that require any level of implicit reflection, because smaller models usually don’t do that.

Distillation

This task is a perfect candidate for model distillation:

- Larger models are more accurate, but they’re slower and more expensive to run.

- Smaller models are faster and cheaper, but less accurate.

For an extension that needs almost instant annotations, waiting over 10 seconds per sentence is unacceptable. On top of that, using high-end models (like OpenAI’s o1 model) can cost up to 30–50 cents per sentence, which quickly becomes prohibitive.

Yet, in most modern text, determining a kanji’s reading doesn’t require extremely complex reasoning. A native Japanese speaker can figure it out almost instantly (except in archaic or highly specialized contexts). This suggests that once a model is properly trained, it shouldn’t need to think through multiple logical steps for common words.

By using a larger model to generate accurate annotations (though slowly and at high cost) and then “teaching” those results to a smaller model—a process known as distillation—I achieved excellent results. With only 100 examples from the larger model, the fine-tuned smaller model ended up 90%–95% as accurate while running 99% cheaper and about 80% faster.
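As a rough illustration of the data-preparation side of distillation, the sketch below turns (sentence, teacher output) pairs into the chat-style JSONL format that OpenAI's fine-tuning endpoint accepts. The system prompt, function names, and file path are hypothetical placeholders, not the ones I actually used.

```python
import json
from typing import Iterable

# Hypothetical instruction; the real prompt would describe the output format.
SYSTEM_PROMPT = "Annotate each kanji in the sentence with its reading in context."


def to_training_line(sentence: str, teacher_output: str) -> str:
    """Build one JSONL line: the teacher's answer becomes the assistant turn."""
    record = {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": sentence},
            {"role": "assistant", "content": teacher_output},
        ]
    }
    # ensure_ascii=False keeps the Japanese text readable in the file.
    return json.dumps(record, ensure_ascii=False)


def write_training_file(pairs: Iterable[tuple[str, str]], path: str) -> int:
    """Write all pairs to a JSONL file and return how many lines were written."""
    count = 0
    with open(path, "w", encoding="utf-8") as f:
        for sentence, teacher_output in pairs:
            f.write(to_training_line(sentence, teacher_output) + "\n")
            count += 1
    return count
```

The resulting file can then be uploaded as the training set for a fine-tuning job on the smaller model. Using the same system prompt at training time and at inference time keeps the two consistent.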

Structured Output

To easily feed the result to the front-end for annotation, I utilized OpenAI’s structured output feature.

Not only does it save costs by cutting unnecessary output tokens, it also ensures that the front-end receives a ready-to-process data structure. That is essential for an application like this one, where the LLM output is consumed by another part of the program rather than read directly by the user.
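Here is a sketch of what such a structured response could look like. The schema, the field names (`tokens`, `surface`, `reading`), and the helper functions are assumptions made for illustration, not my actual format. The `RESPONSE_FORMAT` dictionary follows the `json_schema` shape that OpenAI's structured output feature expects as the `response_format` parameter of a chat completion request.

```python
import html
import json
from dataclasses import dataclass

# Hypothetical schema: a sentence is a list of tokens, each with its reading.
ANNOTATION_SCHEMA = {
    "type": "object",
    "properties": {
        "tokens": {
            "type": "array",
            "items": {
                "type": "object",
                "properties": {
                    "surface": {"type": "string"},  # text as written
                    "reading": {"type": "string"},  # empty for plain kana
                },
                "required": ["surface", "reading"],
                "additionalProperties": False,
            },
        }
    },
    "required": ["tokens"],
    "additionalProperties": False,
}

RESPONSE_FORMAT = {
    "type": "json_schema",
    "json_schema": {
        "name": "kanji_annotations",
        "strict": True,
        "schema": ANNOTATION_SCHEMA,
    },
}


@dataclass(frozen=True)
class Token:
    surface: str
    reading: str


def parse_annotations(raw: str) -> list[Token]:
    """Parse the model's JSON output into tokens."""
    data = json.loads(raw)
    return [Token(t["surface"], t["reading"]) for t in data["tokens"]]


def to_ruby_html(tokens: list[Token]) -> str:
    """Render tokens as HTML <ruby> markup; plain kana pass through as-is."""
    parts = []
    for t in tokens:
        surface = html.escape(t.surface)
        if t.reading:
            parts.append(f"<ruby>{surface}<rt>{html.escape(t.reading)}</rt></ruby>")
        else:
            parts.append(surface)
    return "".join(parts)
```

Because the output is guaranteed to match the schema, the front-end side can skip defensive parsing and go straight from JSON to markup.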

Segmentation and Streaming

Despite fine-tuning the smaller model, the time required to annotate sentences can still vary unpredictably. One sentence might be annotated in under a second, while another of similar length and complexity might take 3–5 seconds. Since large language models are often “black boxes,” we can’t always explain these discrepancies.

To provide a smoother user experience, I implemented two techniques:

- Splitting text into smaller pieces: Shorter inputs usually process faster. When a user sends a block of text, I break it into smaller segments and process them asynchronously. This way, total runtime is bounded by the slowest segment rather than by the sum of all segments.

- Streaming: As each segment is annotated, results are returned immediately (as seen in the demo video). This acts like a progress bar, so the user sees results coming in continuously rather than waiting for everything at once.

Interestingly, segmenting text also improves accuracy, since the model is less prone to errors with shorter inputs.
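The two techniques can be sketched together in a few lines of Python with `asyncio`. This is a simplified illustration rather than the extension's actual code: `annotate` stands in for whatever call reaches the model, and the punctuation set used for splitting is an assumption.

```python
import asyncio
import re
from typing import AsyncIterator, Awaitable, Callable


def split_segments(text: str) -> list[str]:
    """Split after Japanese punctuation, keeping each mark with its segment."""
    parts = re.split(r"(?<=[。、！？])", text)
    return [p for p in parts if p.strip()]


async def annotate_stream(
    text: str,
    annotate: Callable[[str], Awaitable[str]],
) -> AsyncIterator[tuple[int, str]]:
    """Annotate all segments concurrently and yield each one as it finishes.

    Yields (segment index, annotated segment) so the caller can place
    results in the right position even when they arrive out of order.
    """
    segments = split_segments(text)

    async def run(index: int, segment: str) -> tuple[int, str]:
        return index, await annotate(segment)

    tasks = [asyncio.create_task(run(i, s)) for i, s in enumerate(segments)]
    for finished in asyncio.as_completed(tasks):
        yield await finished


async def _demo() -> None:
    # Stand-in for the real model call (hypothetical).
    async def fake_annotate(segment: str) -> str:
        await asyncio.sleep(0.01 * len(segment))
        return f"[{segment}]"

    async for index, result in annotate_stream("今日は晴れ、明日は雨。", fake_annotate):
        print(index, result)


if __name__ == "__main__":
    asyncio.run(_demo())
```

Yielding the segment index along with the result is the design choice that makes streaming safe: segments may finish in any order, and the front-end still knows where each one belongs.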

Possible Improvements

- Okurigana Accuracy: The fine-tuned smaller model still occasionally mishandles okurigana. Including more diverse examples of okurigana during the distillation process could further reduce these errors.

- Refined Segmentation Logic: Right now, I split text using punctuation. Certain authors write very long, punctuation-light sentences, which can lead to slower processing and more mistakes. A better segmentation strategy might help, though we risk losing context if we slice sentences too aggressively.

- Local Model: Hosting the model locally, if feasible, would cut down on both latency and costs. This could be essential as the tool evolves or gains more users.

Random Thoughts

I’m extremely satisfied with how this personal build turned out. It integrates seamlessly with my browser, annotating Japanese text in near-real time and freeing me from the constant dictionary lookups that disrupt my reading flow. There’s also a great deal of potential for refining and expanding the system, and I’m excited to see just how far these techniques can be stretched.

Looking more broadly, I believe language-learning app developers should be on high alert: tools are emerging that can track a learner’s progress, generate fresh, personalized examples, and even conduct interactive practice sessions in the target language—with reading aids dynamically tailored to the learner’s current level. Software that relies exclusively on a hard-coded knowledge base and fixed problem sets is on the verge of becoming obsolete. Before long, apps like Duolingo may feel like relics of a previous century, as more adaptive and intelligent approaches to language learning take center stage.

This future is coming sooner than many expect, and it’s an exciting time to be exploring innovations in language education.