This optional chapter discusses the current (2025) state of VR hardware: its capabilities, design conventions, and limitations.

Features

Let’s explore the basic features offered by modern VR HMDs and get a sense of what we will be working with.

Motion Tracking

A commercial, consumer-grade VR HMD[^1] typically comes with two motion controllers, one for each hand. Both the HMD and the controllers provide 6DOF (six degrees of freedom) tracking: position along three axes plus rotation around three axes.

As of 2025, the most widely used tracking solution is computer-vision-based “inside-out” tracking, relying on cameras built into the outer surface of the headset. The same cameras also track the controllers. Combined with the inertial sensors (gyroscopes and accelerometers) built into the devices, they provide real-time pose[^2] data as well as motion data (velocity, acceleration, angular velocity, etc.).
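To make “pose” concrete: a 6DOF pose is commonly stored as a 3D position plus an orientation quaternion. The sketch below is plain Python with no VR runtime; the `Pose` class, the `(w, x, y, z)` quaternion order, and the right-handed, y-up convention are our own assumptions rather than any SDK’s API. It converts a point from controller-local space to world space, for example to find where the tip of a virtual pointer is.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z), assumed unit length


def _add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def _scale(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def quat_from_axis_angle(axis: Vec3, angle_rad: float) -> Quat:
    """Unit quaternion for a rotation of angle_rad around a unit-length axis."""
    s = math.sin(angle_rad / 2)
    return (math.cos(angle_rad / 2), axis[0] * s, axis[1] * s, axis[2] * s)


@dataclass
class Pose:
    """Hypothetical 6DOF pose: position (3 DOF) plus orientation (3 DOF, as a quaternion)."""
    position: Vec3
    orientation: Quat

    def rotate(self, v: Vec3) -> Vec3:
        # Quaternion rotation of v: t = 2 (u x v), v' = v + w t + u x t
        w, x, y, z = self.orientation
        u = (x, y, z)
        t = _scale(_cross(u, v), 2.0)
        return _add(_add(v, _scale(t, w)), _cross(u, t))

    def transform_point(self, local: Vec3) -> Vec3:
        """Convert a point from this pose's local space to world space."""
        return _add(self.rotate(local), self.position)


# A controller held 1.2 m up, turned 90 degrees around the vertical (y) axis.
controller = Pose((0.0, 1.2, 0.0), quat_from_axis_angle((0.0, 1.0, 0.0), math.pi / 2))
tip = controller.transform_point((0.0, 0.0, 0.1))  # 10 cm along the controller's local +z
print(tip)
```

With a right-handed +90 degree turn about y, the local +z offset ends up along world +x, so the tip lands at roughly (0.1, 1.2, 0.0).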

Popular HMDs that utilize this approach include:

- HP Reverb G2 (2020)

- Oculus Quest 2 (2020)

- Meta Quest 3 (2023)

- PlayStation VR2 (2023)

Apple’s Vision Pro (2024) also employs this method, although it is not marketed as a VR headset and does not come with controllers.

The Meta Quest Pro introduced an additional enhancement: cameras embedded in the controllers themselves to improve tracking quality, particularly when the controllers move outside the HMD’s line of sight. However, this was an expensive solution that has not been widely adopted.

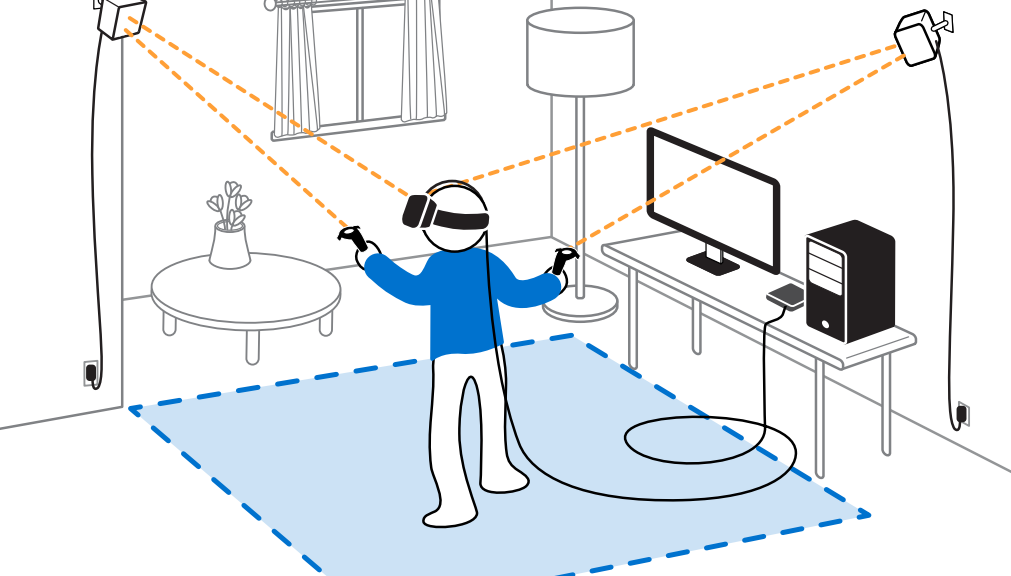

The other tracking method still in use today is SteamVR base station tracking[^3], which is employed by SteamVR-compatible headsets. Some of the more recent models using this system include:

- Bigscreen Beyond (2023)

- Valve Index (2019)

- Pimax Vision 8KX (2019)

- HTC Vive Pro 2 (2021)

Despite its higher cost, complex setup, and requirement for a stationary play space, SteamVR base station tracking remains relevant. This system requires mounting two or more[^4] base stations around the play area, typically in opposite corners. The base stations sweep infrared lasers across the room, and sensors on the headset, controllers, and trackers detect these sweeps to compute their positions with sub-millimeter precision. Unlike headset-camera-based tracking, this method does not depend on what the headset can see: controllers remain tracked even when they are outside the headset’s field of view, as long as at least one base station can see them.

A key advantage of this system is support for full-body tracking (FBT). External trackers, such as HTC Vive Trackers or Tundra Trackers, can be attached to the body, enabling precise limb and torso tracking. This makes SteamVR tracking the preferred choice for high-fidelity applications like motion capture and VRChat, where full-body tracking is extensively used.

However, full-body tracking remains out of reach for most VR players due to the complexity and cost of the setup, as well as limited support from games and applications. While camera-based full-body tracking is (reportedly) in active development, it is not yet a viable alternative.

For this reason, this book will assume that players do not have access to full-body tracking when developing features.

Eye Tracking

Some headsets come equipped with eye-tracking capabilities, which allow the hardware to detect and report where the player is looking on the screen. The accuracy and responsiveness of this technology have improved significantly, making it increasingly viable for various applications.

From a software development perspective, eye tracking can be used for:

- Animating the eyes of the player’s avatar to create more natural and realistic expressions.

- Enhancing UI and gameplay interactions, as seen in games such as Horizon Call of the Mountain and Synapse.

- Enabling eye-tracked foveated rendering, which improves performance by rendering at full detail only where the player is looking and at reduced detail in the periphery.
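The core decision in eye-tracked foveated rendering can be sketched as choosing a shading rate per screen region from its angular distance (eccentricity) to the gaze direction. This is a minimal sketch, assuming a gaze direction and a per-tile view direction are already available; the 10 and 25 degree thresholds and the 1/2/4 rate levels are illustrative assumptions, and real implementations rely on GPU features such as variable-rate shading with per-headset tuning.

```python
import math


def angle_between_deg(a, b):
    """Angle in degrees between two 3D direction vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    # Clamp to guard against rounding pushing the cosine slightly past 1.
    c = max(-1.0, min(1.0, dot / (na * nb)))
    return math.degrees(math.acos(c))


# Hypothetical levels: (max eccentricity in degrees, shading-rate divisor).
FOVEATION_LEVELS = [(10.0, 1), (25.0, 2)]
PERIPHERY_RATE = 4


def shading_rate(gaze_dir, tile_dir):
    """1 = shade every pixel, 2 = once per 2x2 block, 4 = once per 4x4 block."""
    ecc = angle_between_deg(gaze_dir, tile_dir)
    for max_ecc, rate in FOVEATION_LEVELS:
        if ecc <= max_ecc:
            return rate
    return PERIPHERY_RATE
```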

Our book may explore these topics in the future. However, similar to FBT, eye tracking is an expensive feature that most headsets lack. We consider it a low-priority subject.

Connectivity and Standalone Mode

VR HMDs can be broadly categorized into two types: standalone and PCVR-only.

Standalone HMDs, such as the Meta Quest series and Pico devices, typically feature ARM chips running customized versions of Android. Games are compiled to run directly on these headsets.

PCVR-only HMDs lack the processing hardware and operating system needed to run applications on their own. Instead, they must be connected to a PC, either via cables[^5] or via wireless transmitters. The game runs on the PC and its images are streamed to the HMD, while the headset sends back user input, including tracking data and button interactions.

Comparison Table: Standalone vs. PCVR-only HMDs

| | Standalone | PCVR-only |
|---|---|---|
| Price for a complete setup | Lower | Higher |
| Wireless support | Yes | Usually not supported |
| PCVR capability | Yes, via Wi-Fi or wired connection | Yes |
| Visual fidelity | Limited by mobile chip performance | Higher, powered by a desktop GPU |

Inputs

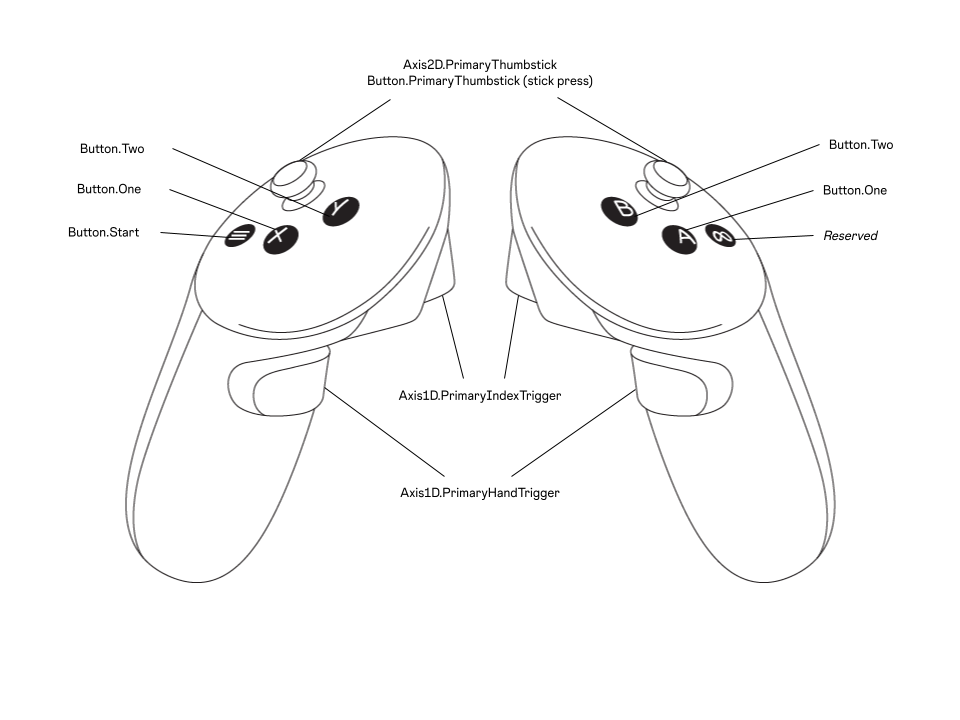

Let’s take a closer look at the standard inputs on a typical pair of motion controllers, using the Meta Quest 3 controllers as an example.

There is no strict industry standard for motion controller button layouts, but most modern devices follow a common design:

- Two round thumb buttons on each controller for in-app interactions.

- Two additional thumb buttons that are harder to reach or press (being concave and/or placed in a less accessible position):

  - One is used for less frequent in-app actions, such as opening the in-game menu.

  - The other is usually reserved (meaning developers cannot reassign its function) and is used for system-level actions like opening the system menu or taking screenshots.

- One index trigger per controller, typically supporting linear (analog) input: it reports how far it is pressed, not just whether it is pressed.

- One hand trigger per controller (usually located under the middle fingers when gripping the controller), also supporting linear input.[^6]

- One thumbstick per controller, providing 2D input and usually clickable. Some controllers replace or supplement it with trackpads.
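Because the triggers report a continuous value, an action such as “grab” needs a threshold. A single threshold makes the state flicker when the value hovers near it, so a common approach is hysteresis: a higher threshold for pressing and a lower one for releasing. A minimal sketch, assuming trigger values normalized to 0.0–1.0; the 0.7 and 0.3 thresholds are illustrative, not values from any SDK.

```python
class TriggerButton:
    """Turns an analog trigger value (0.0-1.0) into a stable pressed/released state."""

    def __init__(self, press_threshold: float = 0.7, release_threshold: float = 0.3):
        if release_threshold >= press_threshold:
            raise ValueError("release_threshold must be below press_threshold")
        self.press_threshold = press_threshold
        self.release_threshold = release_threshold
        self.pressed = False

    def update(self, value: float) -> bool:
        """Feed the latest trigger value; returns the debounced pressed state."""
        if self.pressed and value < self.release_threshold:
            self.pressed = False
        elif not self.pressed and value > self.press_threshold:
            self.pressed = True
        return self.pressed


grip = TriggerButton()
states = [grip.update(v) for v in (0.2, 0.75, 0.5, 0.35, 0.25)]
```

Once pressed, the state survives values of 0.5 and 0.35 and only releases when the trigger drops below 0.3, so small finger tremors do not drop a held object.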

That’s pretty much it! If you compare a pair of VR motion controllers to a traditional game controller, the key differences are:

- The four primary buttons are split between two hands rather than being grouped on one side.

- The absence of a D-pad.
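Applications that need four-way discrete input, such as menu navigation, often derive it from the thumbstick instead. A minimal sketch, assuming stick axes in the range -1.0 to 1.0 with +y meaning “up”; the radial dead zone of 0.5 is an illustrative value.

```python
import math
from typing import Optional


def stick_to_dpad(x: float, y: float, dead_zone: float = 0.5) -> Optional[str]:
    """Map a 2D thumbstick value to 'up', 'down', 'left', 'right', or None."""
    # Radial dead zone: ignore small deflections in any direction.
    if math.hypot(x, y) < dead_zone:
        return None
    # Pick the dominant axis; ties go to the vertical axis.
    if abs(y) >= abs(x):
        return "up" if y > 0 else "down"
    return "right" if x > 0 else "left"
```

For step-by-step navigation, you would typically also require the stick to return to the dead zone before emitting the next direction, so that one push produces one step.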

Limitations

This section covers the main hardware limitations to consider when developing for VR headsets. Some of the topics discussed below will be examined in greater detail in later chapters.

Field-of-View (FoV)

The vertical field of view on a VR HMD typically ranges from 90 to 105 degrees, while the horizontal field of view is usually between 100 and 115 degrees.

Although some headsets offer a significantly wider FoV, they often come with trade-offs such as distortion, reduced edge-to-edge clarity, or a bulkier and heavier form factor.

As developers, we should keep in mind that a user’s FoV in VR is narrower than in real life, where human vision spans roughly 200 degrees horizontally. To ensure a comfortable experience, avoid placing important graphical elements outside the user’s view.
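One way to act on this is to check whether a point falls inside the headset’s field of view before placing something important there. This sketch assumes the point is already expressed in head space using OpenXR’s view-space convention (x right, y up, -z forward) and uses conservative values from the ranges above (100 degrees horizontal, 90 vertical). It treats the view as one symmetric frustum; real headsets have asymmetric per-eye frusta.

```python
import math


def is_in_fov(point_head_space, h_fov_deg=100.0, v_fov_deg=90.0):
    """True if a head-space point (x right, y up, -z forward) lies inside a symmetric FoV."""
    x, y, z = point_head_space
    forward = -z
    if forward <= 0.0:
        return False  # behind or level with the viewer
    # Angles from the view axis, measured separately in the horizontal and vertical planes.
    h_angle = math.degrees(math.atan2(abs(x), forward))
    v_angle = math.degrees(math.atan2(abs(y), forward))
    return h_angle <= h_fov_deg / 2 and v_angle <= v_fov_deg / 2
```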

Pixel Density

Covering a VR user’s entire field of view while keeping the image sharp is a difficult hardware challenge. A desktop monitor, by comparison, covers a much smaller portion of the user’s field of view, so each degree of vision contains more pixels. This means that the same number of pixels appears sharper on a monitor than inside a VR headset.
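This difference is often quantified as pixels per degree (PPD): horizontal pixels divided by the horizontal field of view in degrees. The sketch compares a hypothetical 27-inch 2560×1440 monitor viewed from 60 cm with a hypothetical headset showing 2000 horizontal pixels per eye across 100 degrees. It computes an average PPD only; headset lenses spread pixels unevenly, so manufacturer figures, usually quoted for the lens center, will differ.

```python
import math


def monitor_ppd(h_pixels, diagonal_in, aspect_w, aspect_h, distance_cm):
    """Average horizontal pixels per degree of a flat monitor viewed head-on."""
    width_cm = diagonal_in * 2.54 * aspect_w / math.hypot(aspect_w, aspect_h)
    fov_deg = math.degrees(2 * math.atan((width_cm / 2) / distance_cm))
    return h_pixels / fov_deg


def headset_ppd(h_pixels_per_eye, h_fov_deg):
    """Average horizontal pixels per degree of one headset eye."""
    return h_pixels_per_eye / h_fov_deg


print(f"monitor: {monitor_ppd(2560, 27, 16, 9, 60):.1f} PPD")
print(f"headset: {headset_ppd(2000, 100):.1f} PPD")
```

Under these assumptions the monitor delivers roughly 48 PPD against the headset’s 20, so the headset has well under half the angular resolution despite a similar pixel count.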

While newer HMDs offer improved clarity and continue to evolve, distant objects and small text may be far less legible in VR than they appear on a monitor during development. Developers should account for this limitation to keep the experience readable and accessible.
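To turn this into a layout rule, we can ask how tall text must be in world space to cover a given number of display pixels. A minimal sketch, assuming the headset’s average pixels per degree (PPD, horizontal pixels divided by horizontal FoV) is known; the 20 PPD figure and the 20-pixel target height are illustrative assumptions, not legibility guidelines.

```python
import math


def world_height_for_pixels(target_pixels, ppd, distance_m):
    """World-space height (m) an object needs at distance_m to cover target_pixels."""
    angle_deg = target_pixels / ppd  # angular size the object must subtend
    return 2 * distance_m * math.tan(math.radians(angle_deg) / 2)


# Hypothetical: text should be about 20 px tall on a headset with about 20 PPD.
for d in (0.5, 1.0, 2.0, 5.0):
    h_cm = world_height_for_pixels(20, 20.0, d) * 100
    print(f"{d:.1f} m away -> {h_cm:.1f} cm tall")
```

The required size grows linearly with distance, which is why text that looks fine in a desktop preview can become unreadable once it is placed a few meters away in the headset.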

Edge-to-Edge Clarity

Many popular HMDs, such as the Quest 2, Valve Index, and PlayStation VR2, use Fresnel lenses rather than the newer pancake lenses found in headsets like the Meta Quest 3. Fresnel lenses have a smaller sharp region, so the edges of the image are often significantly blurrier than the center. This effect is even more pronounced if the headset is not well-fitted, as slight movements can cause the user’s eyes to misalign with the lens’s “sweet spots,” further intensifying the blurring.

This serves as a reminder to avoid placing important elements near the edges of the user’s field of view. Even if an element remains visible, text or fine details may be unreadable due to the reduced clarity at the periphery.

Vergence-Accommodation Conflict

The vast majority of VR HMDs have fixed-focus lenses, typically set to a focal distance of around 2 meters. In the real world, two of the mechanisms we use to perceive depth always agree with each other:

- Vergence – the inward movement of the eyes as objects come closer.

- Accommodation – the adjustment of the eye’s lens shape to focus on objects at different distances.

In VR, when a virtual object moves close to the player’s face, the offset between the left- and right-eye images makes the eyes converge as they would for a near object, signaling to the brain that the object is close. The eyes then also try to focus at that near distance, but the light is actually coming from the headset’s fixed focal distance of around 2 meters, so the object appears blurry.[^7]
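The size of the conflict can be put into numbers. The vergence angle when fixating at distance d with interpupillary distance (IPD) p is 2·atan(p / 2d), and focus demand is measured in diopters (1/d, with d in meters). This sketch assumes a 63 mm IPD, a typical adult value, and the 2 m focal distance mentioned above.

```python
import math

FOCAL_DISTANCE_M = 2.0  # fixed lens focal distance, as assumed in the text
IPD_M = 0.063           # assumed interpupillary distance


def vergence_angle_deg(distance_m, ipd_m=IPD_M):
    """Angle between the two eyes' lines of sight when fixating at distance_m."""
    return math.degrees(2 * math.atan((ipd_m / 2) / distance_m))


def accommodation_mismatch_diopters(distance_m, focal_m=FOCAL_DISTANCE_M):
    """Gap between the focus the content implies and the focus the display requires."""
    return abs(1 / distance_m - 1 / focal_m)


for d in (2.0, 1.0, 0.5, 0.25):
    print(f"{d:>4} m: vergence {vergence_angle_deg(d):5.2f} deg, "
          f"mismatch {accommodation_mismatch_diopters(d):.2f} D")
```

The mismatch is zero at the focal distance, 1.5 D at 50 cm, and 3.5 D at 25 cm. Because diopters are reciprocal distances, the conflict grows fastest for objects right in front of the face.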

This means that game mechanics or app interactions should not rely heavily on objects appearing extremely close to the user’s face, as they will not be seen clearly.

Controller Tracking Loss

The camera-based tracking approach for controller motion has improved significantly, with modern algorithms effectively maintaining tracking even when controllers briefly move out of the HMD’s view—for example, when a player places a controller behind their back or under a table.

However, if controllers remain out of sight for an extended period, tracking quality can deteriorate significantly. Applications should not be designed with the assumption that a user’s hands will frequently be outside their front view.
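One way to degrade gracefully when tracking drops is to extrapolate briefly from the last known velocity, then hold the hand still, and finally treat it as lost. The sketch assumes the runtime reports each frame whether the controller position is currently tracked (OpenXR, for instance, exposes position-tracked flags on space locations); `TrackedSample` and `ControllerFilter` are hypothetical types, and the 0.1 s and 0.5 s windows are illustrative.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class TrackedSample:
    """Hypothetical per-frame controller sample."""
    position: Vec3
    velocity: Vec3
    tracked: bool


class ControllerFilter:
    def __init__(self, extrapolate_s: float = 0.1, give_up_s: float = 0.5):
        self.extrapolate_s = extrapolate_s
        self.give_up_s = give_up_s
        self.last_good: Optional[TrackedSample] = None
        self.lost_for = 0.0

    def update(self, sample: TrackedSample, dt: float) -> Tuple[Optional[Vec3], str]:
        """Return (position to display, status): 'tracked', 'predicted', 'frozen' or 'lost'."""
        if sample.tracked:
            self.last_good = sample
            self.lost_for = 0.0
            return sample.position, "tracked"
        self.lost_for += dt
        if self.last_good is None or self.lost_for > self.give_up_s:
            return None, "lost"
        # Extrapolate along the last known velocity, capped to a short window;
        # after the window the position stays frozen at the capped prediction.
        t = min(self.lost_for, self.extrapolate_s)
        p, v = self.last_good.position, self.last_good.velocity
        predicted = tuple(pi + vi * t for pi, vi in zip(p, v))
        status = "predicted" if self.lost_for <= self.extrapolate_s else "frozen"
        return predicted, status
```

When the status becomes `"lost"`, an application might fade out the virtual hand rather than leave it floating at a stale position.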

Lack of Tactile Feedback

Although motion controllers are typically equipped with vibration or haptic components, they still do not provide a true sense of touching objects. This limitation has several implications:

- Stationary objects must be carefully handled. Since there is no physical resistance in VR, players can unintentionally move their bodies through virtual objects. This can lead to confusion, unintuitive interactions, or even unexpected program behavior—such as the user accidentally falling outside the scene.

- Grasped objects have no weight. If object weight is important in your application, you will need to implement alternative methods to simulate it.

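- Designing objects that require two-handed interaction is challenging, since nothing physically keeps the two hands at a fixed distance from each other while they hold the same rigid object.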
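One common workaround for missing weight is to let a grasped object trail the hand with a lag that grows with its mass, so heavy objects feel sluggish. The sketch shows this idea with frame-rate-independent exponential smoothing; `follow_hand`, the `stiffness` constant, and the masses are arbitrary assumptions to tune, and physics-engine joints are a common alternative.

```python
import math


def follow_hand(obj_pos, hand_pos, mass_kg, dt, stiffness=40.0):
    """Move a held object part of the way toward the hand; heavier objects lag more."""
    # Fraction of the remaining gap closed this frame. The exp() form makes
    # two frames of dt equivalent to one frame of 2*dt for a still hand.
    alpha = 1.0 - math.exp(-stiffness * dt / max(mass_kg, 1e-3))
    return tuple(o + (h - o) * alpha for o, h in zip(obj_pos, hand_pos))


hand = (1.0, 0.0, 0.0)
light = follow_hand((0.0, 0.0, 0.0), hand, mass_kg=0.5, dt=1 / 90)
heavy = follow_hand((0.0, 0.0, 0.0), hand, mass_kg=10.0, dt=1 / 90)
```

After one 90 Hz frame, the light object has covered most of the gap while the heavy one has barely moved.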

This is a complex topic that will be explored in depth in later chapters.

Locomotion Problems

Ideally, a user’s virtual representation would mirror their real-world movements exactly, allowing for natural interaction with the virtual world. In practice, however, this is not always possible due to several limitations:

- The virtual world may have no boundaries, but the player’s physical space always does.

- Some players may prefer—or need—to use the application while seated.

- Certain movements are physically impossible, such as performing a double-jump[^8].
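Applications therefore usually add artificial locomotion. One widespread scheme, often called smooth locomotion, moves the player’s tracking-space origin (the “rig”) with the thumbstick, relative to where the head is facing. A minimal sketch, assuming a y-up coordinate system where yaw 0 faces +z and positive yaw turns toward +x (as in Unity); `locomotion_step` and the speed values are illustrative.

```python
import math


def locomotion_step(stick_x, stick_y, head_yaw_rad, speed_mps, dt):
    """Return the (dx, dz) offset to apply to the player rig this frame."""
    # Forward and right vectors come from the head's yaw only, so looking
    # up or down does not change the movement speed.
    fwd_x, fwd_z = math.sin(head_yaw_rad), math.cos(head_yaw_rad)
    right_x, right_z = math.cos(head_yaw_rad), -math.sin(head_yaw_rad)
    # Normalize over-long input so diagonal movement is not faster.
    mag = math.hypot(stick_x, stick_y)
    if mag > 1.0:
        stick_x, stick_y = stick_x / mag, stick_y / mag
    dx = (right_x * stick_x + fwd_x * stick_y) * speed_mps * dt
    dz = (right_z * stick_x + fwd_z * stick_y) * speed_mps * dt
    return dx, dz
```

Steering by head direction is only one option; some applications steer by controller direction instead, which lets players look around while walking straight.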

Lack of Body Tracking

As previously mentioned, most affordable and widely used HMDs do not include full-body tracking. This means the application has no direct way to determine the user’s chest, waist, legs, or feet positions.

Until camera-based full-body tracking becomes a standard feature, developers must rely on estimation techniques to approximate body movement.
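As a simple illustration of such an estimate, the sketch below guesses which way the torso faces by blending the head’s yaw with the direction from the head to the midpoint of the two controllers, on the assumption that hands are usually held in front of the body. This is one hypothetical heuristic among many (inverse kinematics solvers and learned models are also used); it uses the same y-up convention as above (yaw 0 faces +z, positive toward +x), and the 0.5 blend weight and 5 cm cutoff are arbitrary.

```python
import math


def yaw_of(dx: float, dz: float) -> float:
    """Yaw in radians of a horizontal direction (yaw 0 faces +z, positive turns toward +x)."""
    return math.atan2(dx, dz)


def estimate_torso_yaw(head_pos, head_yaw, left_hand, right_hand, hand_weight=0.5):
    """Guess torso yaw from head yaw and the hand midpoint; positions are (x, y, z), y up."""
    mid_x = (left_hand[0] + right_hand[0]) / 2 - head_pos[0]
    mid_z = (left_hand[2] + right_hand[2]) / 2 - head_pos[2]
    if math.hypot(mid_x, mid_z) < 0.05:
        return head_yaw  # hands (nearly) straight below the head: no usable direction
    hand_yaw = yaw_of(mid_x, mid_z)
    # Blend unit vectors rather than raw angles so that +179 and -179 degrees
    # average to 180 instead of 0.
    x = (1 - hand_weight) * math.sin(head_yaw) + hand_weight * math.sin(hand_yaw)
    z = (1 - hand_weight) * math.cos(head_yaw) + hand_weight * math.cos(hand_yaw)
    return yaw_of(x, z)
```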

This challenge, along with locomotion design, will be a recurring theme throughout this book.

Hand (Finger) Tracking

While some HMDs can now track finger poses, this feature remains niche and is typically disabled while using controllers. As a result, if your application includes virtual hands, you must carefully manage hand gestures.

This requires a system that dynamically adjusts hand gestures based on the user’s input and interactions—such as where the hand is positioned, what it is holding, and how it interacts with the environment.
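A minimal sketch of such a system, assuming a hand rig driven by per-finger curl values from 0.0 (open) to 1.0 (fully curled). Free hands derive their curls from the linear index and hand triggers, while a held object overrides them with its own grip pose. `HandPose`, `GRIP_POSES`, the “mug” entry, and the 0.5 thumb factor are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class HandPose:
    """Per-finger curl: 0.0 = fully open, 1.0 = fully curled."""
    thumb: float
    index: float
    middle: float
    ring: float
    pinky: float


# Hypothetical library of object-specific grip poses.
GRIP_POSES = {
    "mug": HandPose(thumb=0.6, index=0.7, middle=0.8, ring=0.8, pinky=0.8),
}


def hand_pose_from_input(index_trigger: float, hand_trigger: float,
                         held_object: Optional[str] = None) -> HandPose:
    """Choose finger curls from controller input and the current interaction."""
    if held_object is not None and held_object in GRIP_POSES:
        return GRIP_POSES[held_object]  # holding something: use its authored grip
    # Free hand: the index finger follows the index trigger, the other three
    # fingers follow the hand trigger, and the thumb loosely follows the grip.
    return HandPose(thumb=0.5 * hand_trigger, index=index_trigger,
                    middle=hand_trigger, ring=hand_trigger, pinky=hand_trigger)
```

A fuller system would blend between poses over a few frames instead of switching instantly, and would also consider where the hand is relative to nearby objects.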

This topic will be covered in dedicated chapters.

Flat GUI and HUD

When integrating 2D user interfaces into a VR environment, several challenges arise:

- Since tactile feedback is absent, interacting with virtual “touchscreens” feels unnatural.

- Motion controllers function differently than a computer mouse, requiring alternative interaction methods.
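A common alternative to touch is a laser pointer: cast a ray from the controller and convert the hit point on the panel into 2D coordinates that can be fed to the UI like a mouse position. A minimal sketch, assuming a flat rectangular panel described by its center, unit-length right and up vectors, and size in meters; `ray_to_panel_uv` returns normalized (u, v) with (0, 0) at the bottom-left corner, or `None` if the ray misses.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_to_panel_uv(origin: Vec3, direction: Vec3, center: Vec3, right: Vec3,
                    up: Vec3, width: float, height: float) -> Optional[Tuple[float, float]]:
    normal = _cross(right, up)
    denom = _dot(direction, normal)
    if abs(denom) < 1e-9:
        return None  # ray is parallel to the panel
    t = _dot(_sub(center, origin), normal) / denom
    if t < 0:
        return None  # panel is behind the controller
    hit = (origin[0] + direction[0] * t,
           origin[1] + direction[1] * t,
           origin[2] + direction[2] * t)
    local = _sub(hit, center)
    # Project onto the panel axes and shift so the panel spans 0..1.
    u = _dot(local, right) / width + 0.5
    v = _dot(local, up) / height + 0.5
    if 0.0 <= u <= 1.0 and 0.0 <= v <= 1.0:
        return u, v
    return None
```

Clicks can then be driven by a trigger state, and hover feedback by the (u, v) position, much as a desktop UI reacts to a mouse.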

Similarly, designing HUDs for a VR game presents unique difficulties. To ensure HUD elements do not obstruct the user’s view while remaining clearly visible, thoughtful engineering is required.
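One widely used compromise is a “lazy follow” (tag-along) element: it stays still while it is near the center of view and only swings back toward the view direction when the user turns far enough away. This sketch handles yaw only; `lazy_follow_yaw`, the 25-degree dead zone, and the turn speed are illustrative assumptions.

```python
import math


def wrap_angle(a):
    """Wrap an angle in radians to the range [-pi, pi)."""
    return (a + math.pi) % (2 * math.pi) - math.pi


def lazy_follow_yaw(hud_yaw, head_yaw, dt, dead_zone_deg=25.0, speed_deg_per_s=180.0):
    """Return the HUD's new yaw; it moves only when the head turns past the dead zone."""
    diff = wrap_angle(head_yaw - hud_yaw)
    dead_zone = math.radians(dead_zone_deg)
    if abs(diff) <= dead_zone:
        return hud_yaw  # close enough to the center of view: stay put
    # Turn toward the head at a capped speed until back at the dead-zone edge.
    excess = abs(diff) - dead_zone
    step = min(excess, math.radians(speed_deg_per_s) * dt)
    return wrap_angle(hud_yaw + math.copysign(step, diff))
```

Keeping the element still for small head movements avoids the swimming feeling of a fully head-locked HUD, while the catch-up rule keeps it from being left behind.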

That’s it for this chapter! I hope the long list of hardware limitations hasn’t discouraged you from developing a user-friendly VR application. The purpose of this book is to help you overcome—or at least mitigate—these challenges.
